Apache Flume - Get logs out of RabbitMQ and into HDFS

This post is an extension of Tutorial 12 from Hortonworks (original here), which shows how to use Apache Flume to consume entries from a log file and put them into HDFS.

One problem I see with the Hortonworks sandbox tutorials (and don’t get me wrong, I think they are great) is that they either assume you already have data loaded into your cluster, or they demonstrate an unrealistic way of loading it: uploading a CSV file through your web browser. One exception is Tutorial 12, which shows how to use Apache Flume to monitor a log file and insert the contents into HDFS.

In this post I’m going to further extend the original tutorial to show how to use Apache Flume to read log entries from a RabbitMQ queue.

Apache Flume is described by the folk at Hortonworks as:

Apache™ Flume is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of streaming data into the Hadoop Distributed File System (HDFS). It has a simple and flexible architecture based on streaming data flows; and is robust and fault tolerant with tunable reliability mechanisms for failover and recovery.

…continue reading about Apache Flume over on the Hortonworks website.

Overview

In this article I will cover the following:

- Installation and Configuration of Flume

- Generating fake server logs into RabbitMQ

To follow along you will need to:

- Download tutorial files

- Download and configure the Hortonworks Sandbox

- Have a RabbitMQ broker running, and accessible to the sandbox

- Have Python 2.7 installed

Installing Flume

With the Sandbox up and running, press Alt and F5 to bring up the login screen. You can log in using the default credentials:

login: root
password: hadoop

After you’ve logged in, type:

yum install -y flume

You should now see the installation progressing until it says Complete!

For more details on installation take a look at Tutorial 12 from Hortonworks.

Take the flume.conf file that is part of my tutorial files and upload it into the sandbox by following the instructions in the original tutorial. Before uploading the file, check that the RabbitMQ configuration matches your system:

sandbox.sources.rabbitmq_source1.hostname = 192.168.56.65
sandbox.sources.rabbitmq_source1.queuename = logs
sandbox.sources.rabbitmq_source1.username = guest
sandbox.sources.rabbitmq_source1.password = guest
sandbox.sources.rabbitmq_source1.port = 5672
sandbox.sources.rabbitmq_source1.virtualhost = logs

You shouldn’t need to change anything else.
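
If you want to double-check those values before uploading the file, a short script can pull them out of flume.conf. This is only a minimal sketch and is not part of the tutorial files: the function names `parse_flume_properties` and `rabbitmq_source_settings` are hypothetical, and `SAMPLE` simply repeats the settings listed above. It assumes the file uses plain `key = value` lines with `#` comments.

```python
def parse_flume_properties(text):
    """Parse 'key = value' lines (Java properties style) into a dict."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comments.
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a key/value line
        props[key.strip()] = value.strip()
    return props


def rabbitmq_source_settings(props, agent="sandbox", source="rabbitmq_source1"):
    """Return the settings of one source with the common prefix stripped."""
    prefix = "{0}.sources.{1}.".format(agent, source)
    return dict((key[len(prefix):], value)
                for key, value in props.items()
                if key.startswith(prefix))


# Sample input: the RabbitMQ settings from this post.
SAMPLE = """\
sandbox.sources.rabbitmq_source1.hostname = 192.168.56.65
sandbox.sources.rabbitmq_source1.queuename = logs
sandbox.sources.rabbitmq_source1.username = guest
sandbox.sources.rabbitmq_source1.password = guest
sandbox.sources.rabbitmq_source1.port = 5672
sandbox.sources.rabbitmq_source1.virtualhost = logs
"""

settings = rabbitmq_source_settings(parse_flume_properties(SAMPLE))
for name in sorted(settings):
    print("{0} = {1}".format(name, settings[name]))
```

To use it against your own file, replace `SAMPLE` with the contents of flume.conf (for example `open("flume.conf").read()`) and compare the printed values with your broker.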

For Flume to be able to consume from a RabbitMQ queue, I created a new plugins directory and then uploaded the Flume-ng RabbitMQ library into it.

Creating the required directories can be done from the Sandbox console with the following commands:

mkdir /usr/lib/flume/plugins.d
mkdir /usr/lib/flume/plugins.d/flume-rabbitmq
mkdir /usr/lib/flume/plugins.d/flume-rabbitmq/lib

Once these directories have been created, upload the flume-rabbitmq-channel-1.0-SNAPSHOT.jar file into the lib directory.

Starting Flume

From the Sandbox console, execute the following command:

flume-ng agent -c /etc/flume/conf -f /etc/flume/conf/flume.conf -n sandbox

Generate server logs into RabbitMQ

To generate log entries I took the original python script (which appended entries to the end of a log file), and modified it to publish log entries to RabbitMQ.

To run the Python script you will need to install the pika client library; see the instructions on the RabbitMQ website.

The script is set up to connect to a broker on localhost, using a virtual host called “logs”. You will need to make sure that the virtual host exists.

You can start the script by running:

python.exe c:\path\to\generate_logs.py

Once started, the script declares an exchange and a queue and then starts publishing log entries.
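
For readers who want to see roughly what such a publisher looks like, here is a minimal sketch using pika. It is not the generate_logs.py script from the tutorial files. The exchange name, the exchange type, the `durable` flag, the guest credentials and the sample country and status values are all assumptions made for illustration. The only details taken from this post are the “logs” virtual host and queue, and the four pipe-delimited fields (time, ip, country, status) that the HCatalog table expects. It is written against the keyword arguments of recent pika releases (`exchange_type`); very old releases used `type` instead.

```python
import random
import time

COUNTRIES = ["US", "GB", "DE", "FR", "IN"]  # illustrative values only
STATUSES = ["SUCCESS", "ERROR"]              # illustrative values only


def make_log_line(now=None, rng=random):
    """Build one pipe-delimited entry: time|ip|country|status."""
    timestamp = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime(now))
    ip = ".".join(str(rng.randint(1, 254)) for _ in range(4))
    return "|".join([timestamp, ip, rng.choice(COUNTRIES), rng.choice(STATUSES)])


def publish_logs(count, host="localhost", virtual_host="logs",
                 exchange="logs", queue="logs", delay=0.5):
    """Declare an exchange and a queue, bind them, then publish `count` entries."""
    import pika  # imported here so make_log_line works without pika installed

    params = pika.ConnectionParameters(
        host=host,
        virtual_host=virtual_host,
        credentials=pika.PlainCredentials("guest", "guest"),  # assumed defaults
    )
    connection = pika.BlockingConnection(params)
    try:
        channel = connection.channel()
        channel.exchange_declare(exchange=exchange, exchange_type="direct")
        # durable=True is an assumption; it must match any existing declaration
        # of the same queue, otherwise the broker rejects the declare.
        channel.queue_declare(queue=queue, durable=True)
        channel.queue_bind(queue=queue, exchange=exchange, routing_key=queue)
        for _ in range(count):
            channel.basic_publish(exchange=exchange, routing_key=queue,
                                  body=make_log_line())
            time.sleep(delay)
    finally:
        connection.close()
```

Calling `publish_logs(100)` would publish one hundred entries to the local broker, one every half second. Keeping `make_log_line` separate from the connection code makes it easy to check the line format without a broker running.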

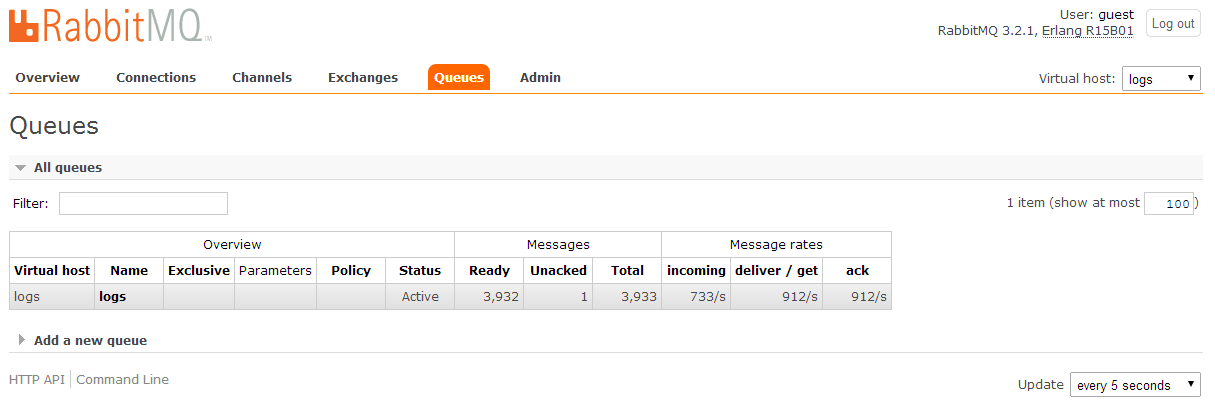

You can see that everything is running by going over to the RabbitMQ Management console.

The incoming messages are the ones being generated by the Python script and the deliver / get and ack messages are the ones being consumed by Flume.
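
The same numbers can also be read from a script through the management plugin's HTTP API. The sketch below is an illustration under several assumptions: the management plugin listens on port 15672 (older RabbitMQ releases used a different port), the guest credentials are valid for the host you call from, and the helper names `queue_api_url`, `summarize` and `fetch_queue_stats` are hypothetical.

```python
import base64
import json

try:
    from urllib.parse import quote               # Python 3
    from urllib.request import Request, urlopen
except ImportError:                              # Python 2.7
    from urllib import quote
    from urllib2 import Request, urlopen


def queue_api_url(host, virtual_host, queue, port=15672):
    """Build the management API URL for one queue."""
    # The vhost and queue name are path segments, so "/" must be encoded too.
    return "http://{0}:{1}/api/queues/{2}/{3}".format(
        host, port, quote(virtual_host, safe=""), quote(queue, safe=""))


def summarize(payload):
    """Pick the counters that correspond to the console's message rates."""
    stats = payload.get("message_stats", {})
    return {
        "ready": payload.get("messages_ready", 0),
        "published": stats.get("publish", 0),    # sent by the Python script
        "delivered": stats.get("deliver_get", 0),  # handed to Flume
        "acked": stats.get("ack", 0),            # acknowledged by Flume
    }


def fetch_queue_stats(host, virtual_host="logs", queue="logs",
                      username="guest", password="guest"):
    """Fetch and summarize the statistics of one queue over HTTP."""
    request = Request(queue_api_url(host, virtual_host, queue))
    token = base64.b64encode(
        "{0}:{1}".format(username, password).encode("utf-8"))
    request.add_header("Authorization", "Basic " + token.decode("ascii"))
    response = urlopen(request)
    try:
        return summarize(json.loads(response.read().decode("utf-8")))
    finally:
        response.close()
```

If Flume is keeping up, the `published`, `delivered` and `acked` counters returned by `fetch_queue_stats("localhost")` should grow together while `ready` stays close to zero.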

Setting up HCatalog

The following command (from the original tutorial) can be used to create the HCatalog table (make sure you enter it on a single line):

hcat -e "CREATE TABLE firewall_logs (time STRING, ip STRING, country STRING, status STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY '|' LOCATION '/flume/rab_events';"

You should now be able to browse this table from the web interface.

To do some analysis on this data you can now follow steps 5 and 6 from the original tutorial.
