Getting Started with the Hortonworks Sandbox

In my previous post, I made reference to the Twitter Big Data example for Microsoft StreamInsight (project page).

The sample collects tweets in real-time from Twitter then does a few things:

- Displays current information about trends in real-time on a web dashboard

- Stores information about the tweets into a SQL Azure database

- Stores the actual tweets in an Azure Blob Store

There are also some commands that you can use with Azure HDInsight to do some post-processing. This is great if you have access to the HDInsight Preview, but what if you are stuck on the waiting list? That’s where the Hortonworks Sandbox comes in!

In this post, I’m going to give an overview of getting the sandbox set up and then moving the data collected by StreamInsight from Azure into the Sandbox. In the next post I will show how to do the analysis.

Getting Started with the Sandbox

You will need to download VirtualBox and the Hortonworks Sandbox appliance:

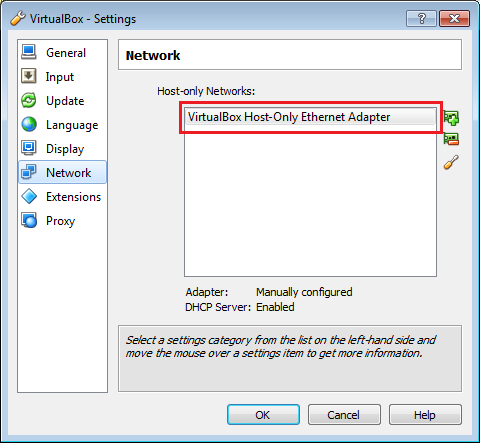

Once VirtualBox has been installed, ensure that there is a host-only network configured.

To do this, go to File -> Preferences then click “Network”. You should see an entry in the Host-only Networks:

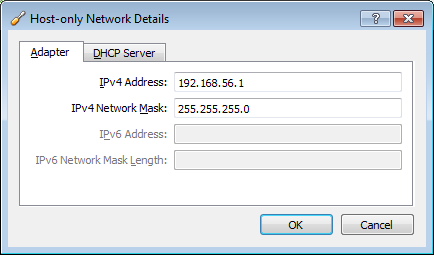

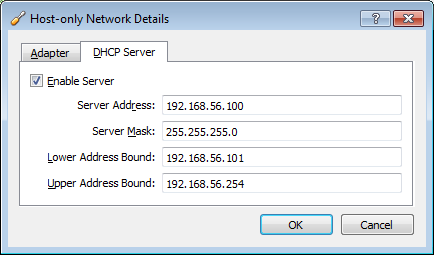

Click on the screwdriver icon to edit the entry, and ensure that the adapter settings match the following:

Once the network is correctly configured, click OK until all the windows are closed.

The next step is to import the sandbox appliance. From the VirtualBox Manager, click File -> Import Appliance. This will bring up the import wizard.

On the first page click the Open appliance button, and browse to where you downloaded the sandbox. Click Next and you will see the “Appliance settings” page; you shouldn’t have to change any details.

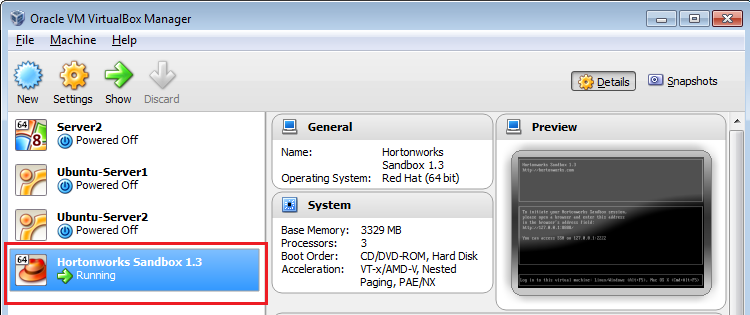

Click Import and a progress window will appear. Once the import has completed, you will see the sandbox in the list of servers:

Configuring the Sandbox

The next step is to configure the network adapters.

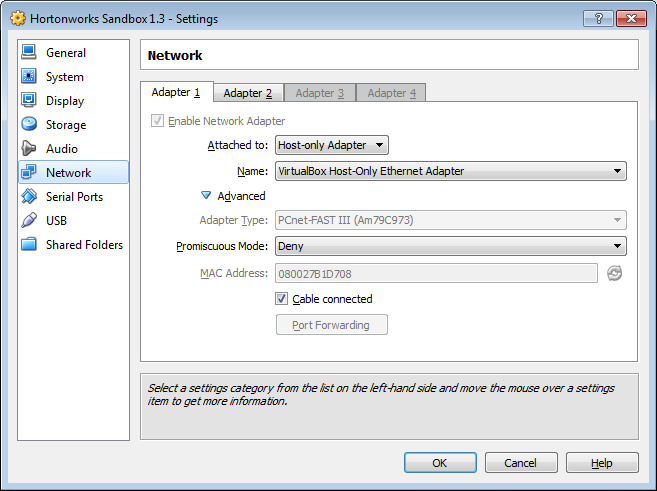

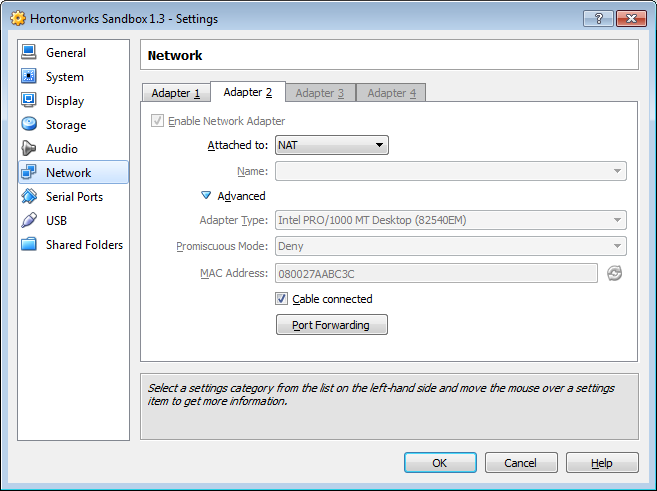

Right click on the Hortonworks Sandbox, and click Settings. Then click Network to bring up the Network Configuration.

I configured my adapters like this:

The Sandbox is now configured.

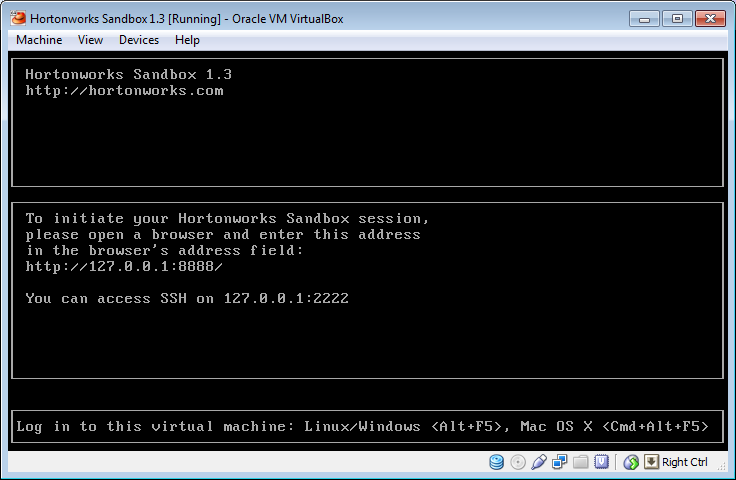

You can now boot the sandbox. Right click on it in the VirtualBox Manager and click Start. This will boot up the Sandbox; after a minute or two you will see the following:

This is where I was caught out: from looking at this window, you don’t know what IP address you should use to browse to the Sandbox. With the host-only network configured as above, you can browse to the sandbox on 192.168.56.101.

You will be asked to fill in some details about yourself then you’re good to go.
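If the page does not load, it can help to check the host-only network from a terminal on the host. The following is only a sketch: it assumes that VBoxManage and curl are on the PATH (on Windows, for example, from a Git Bash or Cygwin shell), and the function names are made up for illustration. The address is the one mentioned above.

```shell
# Sketch: confirm the sandbox is reachable from the host.
# Assumes VBoxManage and curl are on the PATH; function names are illustrative.
SANDBOX_IP="192.168.56.101"   # address of the sandbox on the host-only network

sandbox_url() {
  # Build the URL you would type into the browser.
  printf 'http://%s/' "$1"
}

check_sandbox() {
  # List the host-only interfaces so you can confirm one is on 192.168.56.x,
  # then request only the response headers from the sandbox.
  VBoxManage list hostonlyifs &&
  curl --silent --show-error --head --max-time 10 "$(sandbox_url "$SANDBOX_IP")"
}
```

Calling check_sandbox once the VM has booted should print the host-only interface details followed by the HTTP response headers. A timeout usually points back at the adapter settings.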

Adding the SQL Server driver for Apache Sqoop

Apache Sqoop is a tool designed for efficiently transferring bulk data between Hadoop and structured datastores such as relational databases.

In the Twitter Big Data sample, SQL Azure is used to store information about the Tweets that it collects. When first set up, the Sandbox is missing the JDBC driver for SQL Server. To add it, follow these steps:

- Find the JDBC driver for MS SQL Server on the Microsoft download site, currently sqljdbc_4.0.2206.100_enu.tar.gz

- Using Putty, SSH onto the sandbox and log on as root with password hadoop

- Issue the following command to download the driver: wget http://download.microsoft.com/download/0/2/A/02AAE597-3865-456C-AE7F-613F99F850A8/sqljdbc_4.0.2206.100_enu.tar.gz

- Unzip using this command: gunzip sqljdbc_4.0.2206.100_enu.tar.gz

- Extract tar: tar -xvf sqljdbc_4.0.2206.100_enu.tar

- Copy to the Sqoop lib directory: cp sqljdbc_4.0/enu/sqljdbc4.jar /usr/lib/sqoop/lib/
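The download, extract and copy steps above can also be collected into one shell function, as sketched below. The URL, archive name and paths are the ones from the steps. The function name is my own, and combining gunzip and tar into `tar -xzvf` is a small shortcut rather than a different procedure.

```shell
# Sketch: the driver steps above as one function, to be run as root on the sandbox.
DRIVER_URL="http://download.microsoft.com/download/0/2/A/02AAE597-3865-456C-AE7F-613F99F850A8/sqljdbc_4.0.2206.100_enu.tar.gz"
DRIVER_TARBALL="${DRIVER_URL##*/}"   # file name part of the URL
SQOOP_LIB="/usr/lib/sqoop/lib"

install_sqljdbc() {
  # Each step only runs if the previous one succeeded.
  wget "$DRIVER_URL" &&
  tar -xzvf "$DRIVER_TARBALL" &&
  cp sqljdbc_4.0/enu/sqljdbc4.jar "$SQOOP_LIB/" &&
  ls -l "$SQOOP_LIB/sqljdbc4.jar"    # confirm the jar is in place
}
```

After pasting this into the SSH session, run install_sqljdbc once. The final ls should show sqljdbc4.jar in the Sqoop lib directory.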

This covers all the configuration and setup of the sandbox.

Setting up the Twitter Big Data Solution

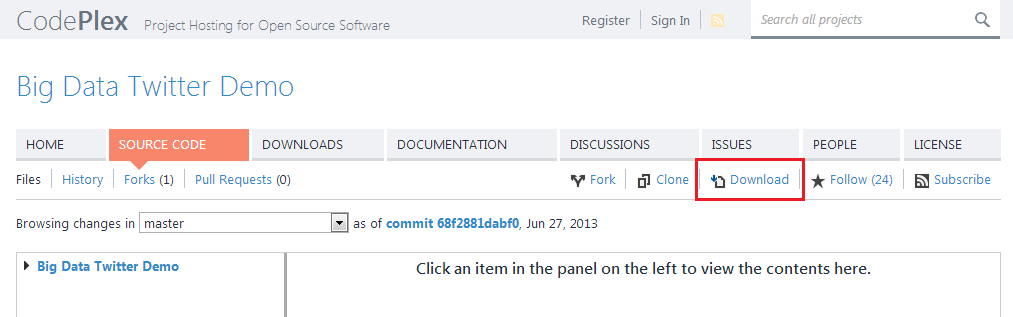

The Twitter Big Data solution can be downloaded from the CodePlex project site, by clicking on Source Code, then Download.

You will need to have StreamInsight installed and running on your development machine. Installation instructions can be found here.

The Twitter Big Data solution comes with a README file which outlines various steps that you need to go through to configure the solution. You will need to fill in the app.config file with details of your SQL Azure database and Blob store. Once that is done you can run the solution and you should see data in the web dashboard. At the same time, records will be going into your blob store and SQL Azure database.

Once there is some data in the SQL Azure database and the Blob Store, we can use various components of the Hortonworks Sandbox to pull data from Azure into Hadoop.

The remainder of this post is basically going to be a re-write of the instructions found in the README file of the solution.

Moving data from SQL Azure to Hadoop using Sqoop

Sqoop is a component in the sandbox which connects to different data sources and moves their data into HDFS (Hadoop Distributed File System).

I wasn’t able to get Sqoop working with SQL Azure via the nice UI provided by the sandbox, so I used SSH instead. You will need Putty or any other SSH client to complete this stage.

- Connect to the sandbox via SSH, and log on using user: root, password: hadoop

- Execute the following command:

sqoop import --connect "jdbc:sqlserver://sql_azure_server_name_here.database.windows.net;username=your_sql_azure_username_here@sql_azure_server_name_here;password=your_sql_azure_password_here;database=your_db_name_here" --table TweetInfo --hive-import --hive-overwrite

Sqoop will now start moving data from your SQL Azure database into the sandbox. This shouldn’t take more than a few minutes, depending on how much data you have in the SQL Azure database and your connection speed.
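Because the connection string is long and easy to mistype, it may help to build it from variables. The sketch below does that for the same import command. The variable and function names are illustrative, and the values are the placeholders from the command above, to be replaced with your own.

```shell
# Sketch: build the connection string from variables, then run the import.
# All values below are placeholders, not real settings.
SQL_SERVER="sql_azure_server_name_here"
SQL_USER="your_sql_azure_username_here"
SQL_PASSWORD="your_sql_azure_password_here"
SQL_DB="your_db_name_here"

build_jdbc_url() {
  # $1 = server name, $2 = user name, $3 = password, $4 = database name
  printf 'jdbc:sqlserver://%s.database.windows.net;username=%s@%s;password=%s;database=%s' \
    "$1" "$2" "$1" "$3" "$4"
}

import_tweet_info() {
  # Same options as the command above: import TweetInfo into Hive,
  # overwriting any existing data in the Hive table.
  sqoop import \
    --connect "$(build_jdbc_url "$SQL_SERVER" "$SQL_USER" "$SQL_PASSWORD" "$SQL_DB")" \
    --table TweetInfo \
    --hive-import \
    --hive-overwrite
}
```

Run import_tweet_info in the SSH session once the variables are filled in. Afterwards, `hive -e 'SHOW TABLES;'` should list the imported table.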

Moving data from Azure Blob Storage into HDFS

The nice thing about HDInsight in Azure is that it can be configured to use Azure Storage Vault, which basically means you can map the file system of your Hadoop system to Azure blob storage. Unfortunately, there is no straightforward way that I could find (if I’m wrong please let me know!) to connect the sandbox to Azure blob storage.

In the README file, the author has you map Hadoop on Azure (HDInsight) to Azure Storage Vault (ASV). Because I couldn’t figure out a good way to do this with the sandbox, what I ended up doing was a bit clunky.

I used the Azure Storage Explorer to download all the blobs from the container, which I specified in the app.config, into a directory. I then zipped all of these files and then uploaded this zip file into the Sandbox.

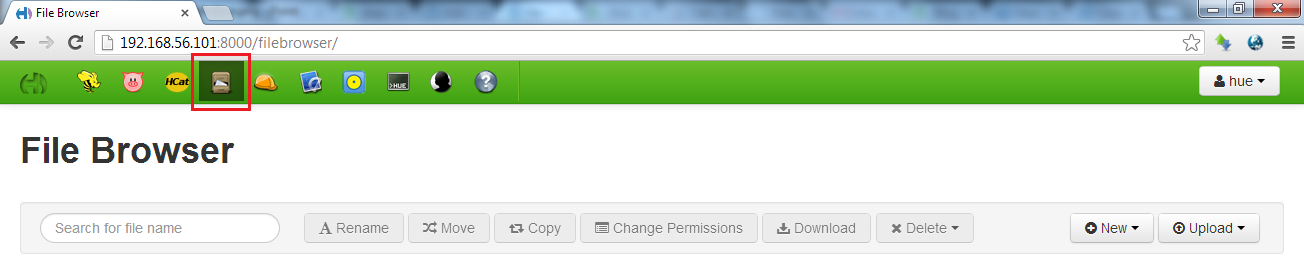

Uploading the zip file to the Sandbox is very easy. With the Sandbox open in your web browser, click the File Browser icon:

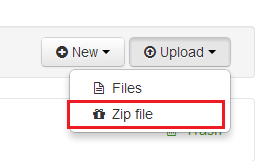

Then click Upload -> Zip File:

From here select the zip file with all your tweets. This will upload the file, then extract all the files into a directory of the same name as the zip file.
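If you would rather stay in the SSH session, a rough command-line equivalent is sketched below. I used the File Browser myself, so treat this as an untested alternative. It assumes the zip file has already been copied onto the sandbox, that unzip is installed there, and that the HDFS parent directory you pass in already exists. The function names are illustrative.

```shell
# Sketch: command-line alternative to the File Browser upload.
# Assumes the zip file is already on the sandbox and unzip is installed.
zip_to_dir() {
  # tweets.zip -> tweets (the File Browser names the directory the same way)
  basename "$1" .zip
}

upload_tweets() {
  # $1 = local zip file, $2 = existing HDFS parent directory
  dir_name="$(zip_to_dir "$1")"
  unzip -q "$1" -d "$dir_name" &&
  hadoop fs -put "$dir_name" "$2/"   # copies the whole directory into HDFS
}
```

For example, `upload_tweets tweets.zip /user/hue` would create /user/hue/tweets in HDFS. Both the file name and the HDFS path here are hypothetical.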

Processing the Tweets using Hive

There is a script included in the solution called “Analyze Tweets with Hive.txt”. I have made some small modifications to it, which you can download here. If you compare this file to the original you will notice that the first two configuration lines have been removed as well as some of the lines which were commented out. Also, the location for the raw_tweets table has been updated.

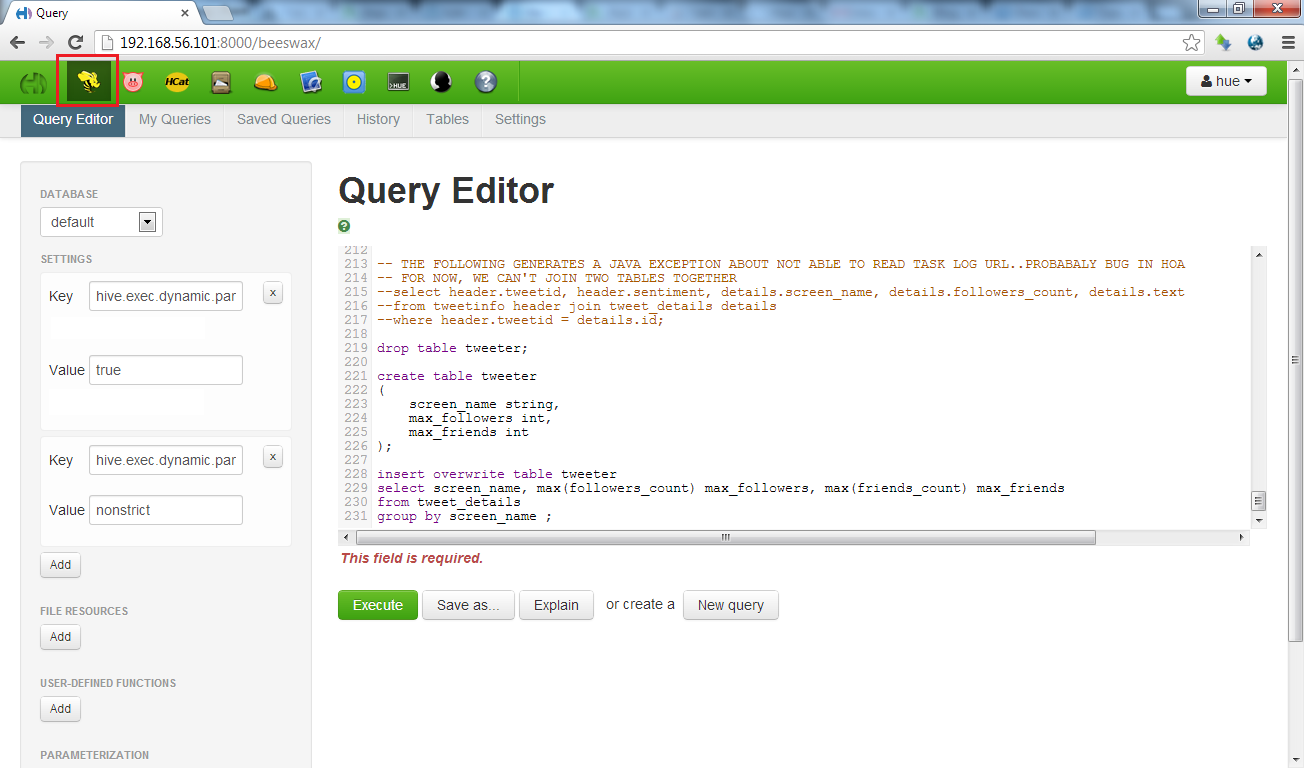

To process this script using the Sandbox, in your browser:

- Click on the Beeswax icon (this is the Hive UI)

- In the Settings section, add the following settings:

- key: hive.exec.dynamic.partition value: true

- key: hive.exec.dynamic.partition.mode value: nonstrict

- Open the modified script, and copy and paste the contents into the query editor

- Click Execute to start the script
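The same two settings can also be passed to the Hive command-line client over SSH, as sketched below. This is an alternative I am assuming will work on the sandbox rather than the route I took, and it needs the modified script to be copied onto the sandbox first. The function name and the HIVE_BIN override are illustrative.

```shell
# Sketch: run the Hive script over SSH with the same two settings.
# HIVE_BIN can be overridden (for example with echo) to preview the command.
run_tweet_analysis() {
  # $1 = path to the modified script on the sandbox
  "${HIVE_BIN:-hive}" \
    --hiveconf hive.exec.dynamic.partition=true \
    --hiveconf hive.exec.dynamic.partition.mode=nonstrict \
    -f "$1"
}
```

Running `HIVE_BIN=echo run_tweet_analysis tweets.hql` prints the arguments without starting Hive, which is a cheap way to check them first. The file name tweets.hql is a hypothetical example.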

This will then start processing the raw tweets that were stored in the Azure blob storage, which we moved into HDFS.

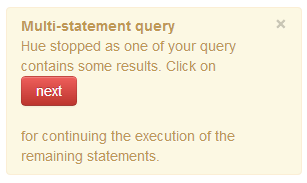

How long this takes depends on how many tweets were collected. Unfortunately you can’t leave this unattended, as you will see the following come up:

You will need to click Next to continue processing.

Installing the Hive ODBC Driver

To analyse the tweets using Excel you will need to install the ODBC Driver from Hortonworks, which can be found here.

You will need to make sure you install the version that corresponds to the version of Excel you have installed (32bit / 64bit).

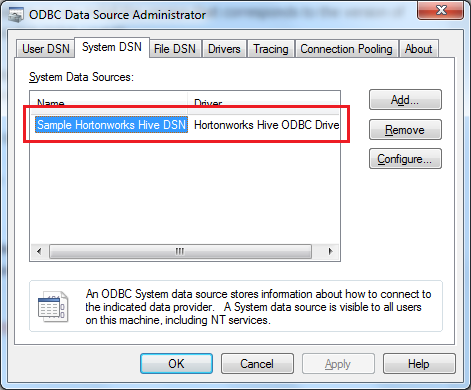

After you have installed the driver, open Administrative Tools. Then open ODBC Data Sources (32-bit or 64-bit, matching the driver you installed).

Click the System DSN tab, and you should see:

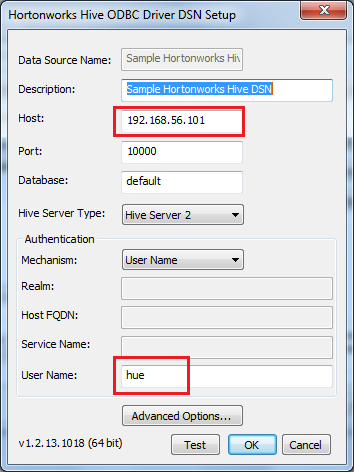

Click on Configure. Here you will need to fill in the host and user name fields:

At this point all the Tweet information that was generated by the StreamInsight application is in the Sandbox, and the ODBC Driver is installed and configured.

In the next post I will show how to analyse this information.
