Load Files into HDFS using SQL Server Integration Services

UPDATE: I’ve made a few changes to how the component works -

Read about it here.

Recently I have been playing around with the Hortonworks Big Data sandbox, the tutorials were very good, and made it easy to get going with some sample data.

Given that I mainly use the Microsoft BI stack I was hoping for the ability to use SSIS to load my data, especially as it would be nice down the line to do this as part of an ETL process. However, Microsoft as yet don’t have first class support for HDFS as a destination.

So to fix this problem I decided to build a custom destination for SSIS that would load data into HDFS.

My goal was to be able to transfer a bunch of files from my filesystem into HDFS.

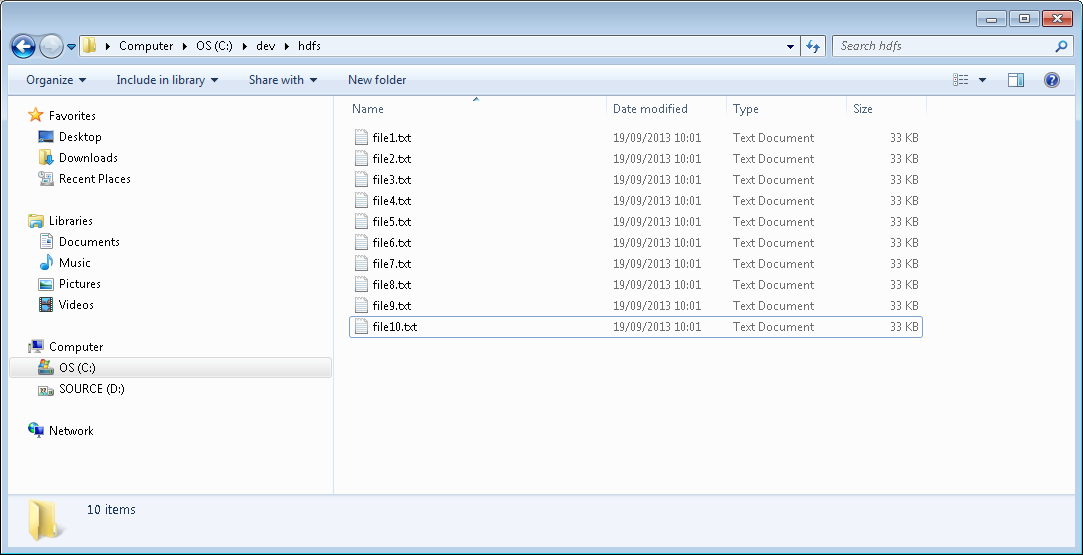

This is my directory of files

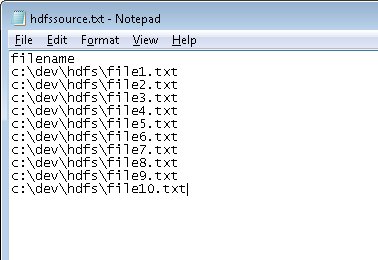

The way my custom destination currently works is by sending a list of filenames into it, these files will then be transferred into HDFS. Here is my source file with a list of files to upload.

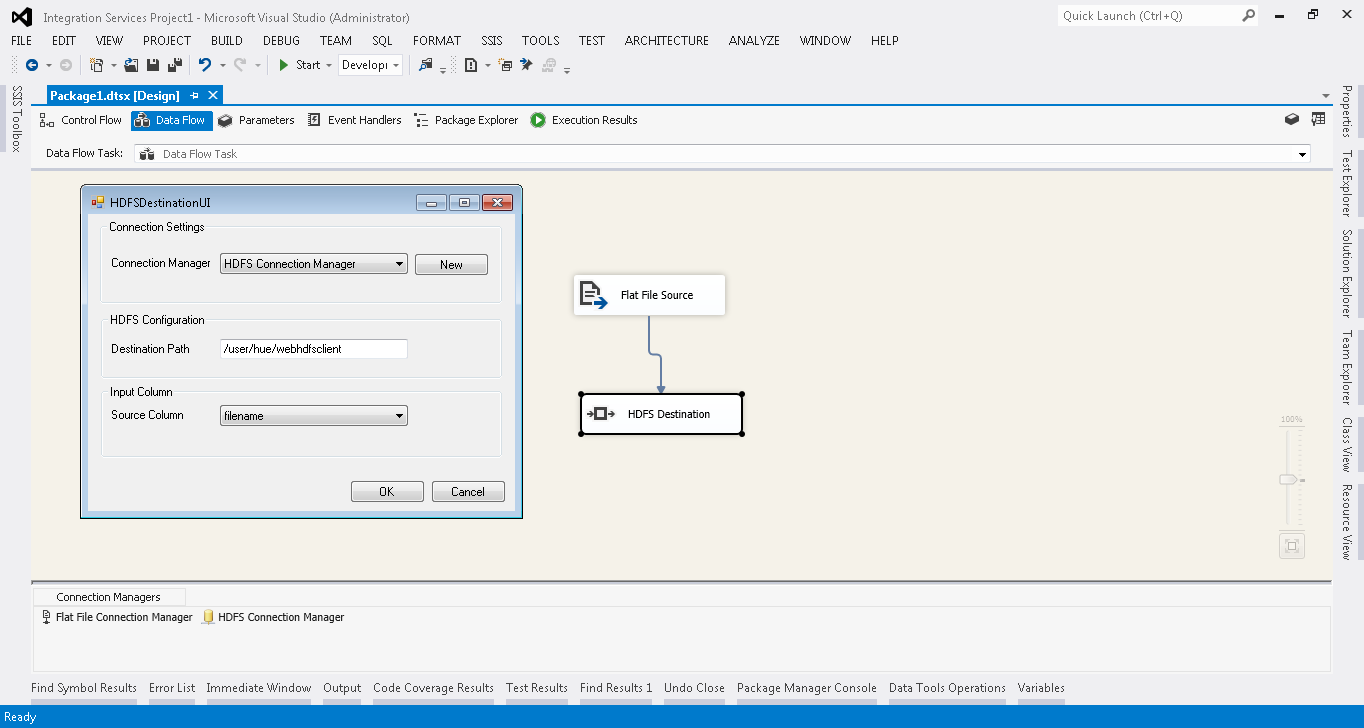

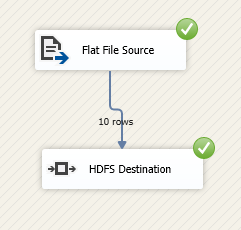

Next up is the SSIS package. As you can see below, the package is very simple. There is a flat file source, which I’ve setup to read from the source file above, it then feeds into the HDFS Destination.

The HDFS Destination has three parts to configure:

- Configuration Manager

- Destination Path - this will be the path in HDFS to upload the files to (I had to change the permissions so all groups had write access)

- Source column - this is the column from the flat file source to read the filenames from

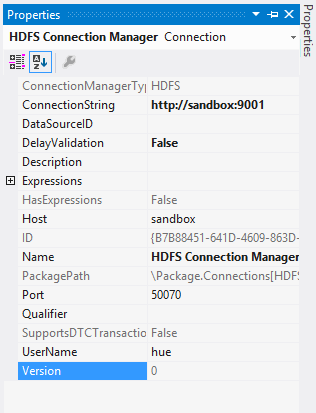

The HDFS Configuration Manager is setup with a hostname and port, I’ve set mine to “sandbox” and 50070. Where sandbox is setup in my hosts file to point to my Hortonworks sandbox (192.168.56.101).

After all this has been configured the SSIS package can be run.

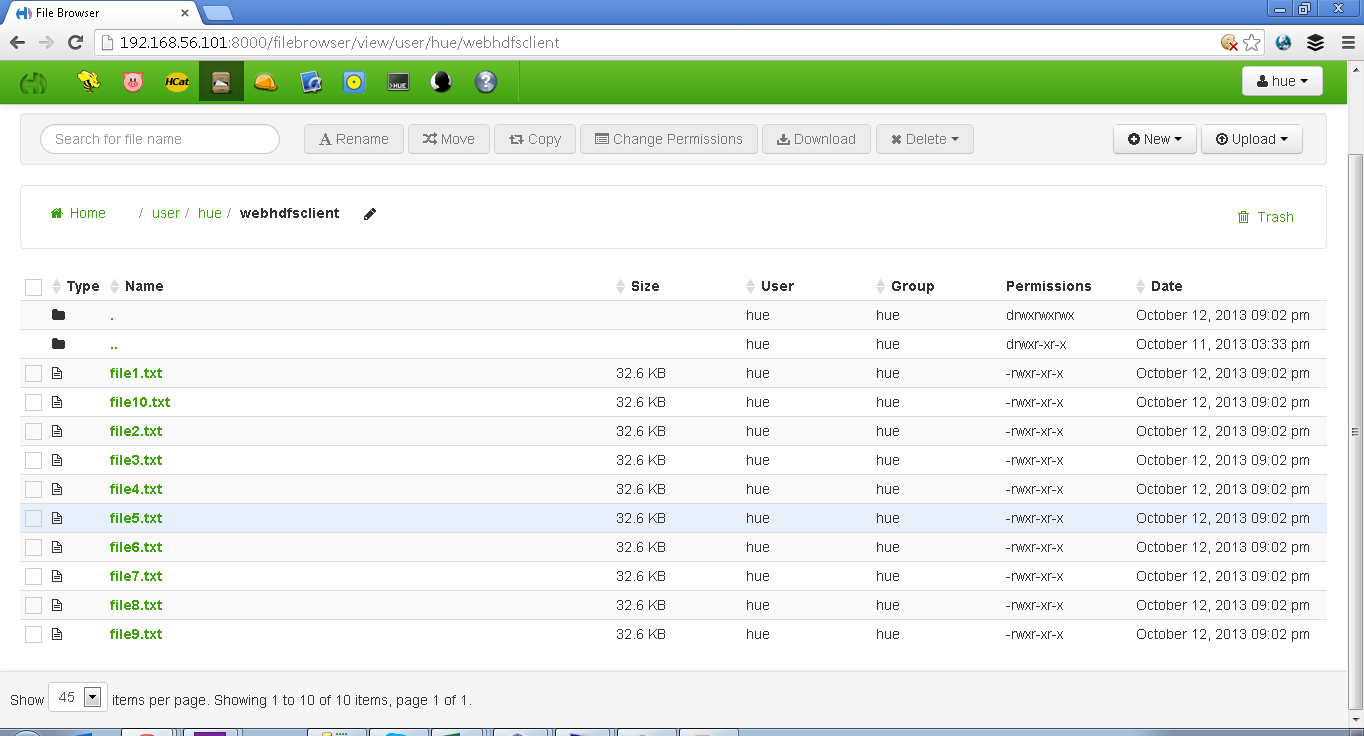

Now, to check HDFS

All the files have been uploaded into HDFS.

The custom component uses the Microsoft Hadoop Webclient, which is part of the Microsoft Hadoop .Net SDK. The code is available on GitHub.

That wraps up the first incarnation of my HDFS Destination. I’m planning to further extend it, so that rather than sending in a list of filenames to upload you will be able to send in string data which will then be transferred as files into HDFS.

🍪 I use Disqus for comments

Because Disqus requires cookies this site doesn't automatically load comments.

I don't mind about cookies - Show me the comments from now on (and set a cookie to remember my preference)