Performance tuning stored procedures - when indexes aren't the problem

Recently I have been doing performance tuning on a few stored procedures that power some of the most important parts of DrDoctor. Whilst I'm confident I can read query execution plans much better now than I could 6 months ago, that isn't the biggest takeaway. What I've noticed with two stored procedures in particular is that they were running slowly because they were both reading lots and lots of data from one or two tables, which was then slowly whittled away into practically nothing.

Take the most recent stored procedure I had to tune. This stored procedure finds slots for patients to book into for their upcoming hospital appointment.

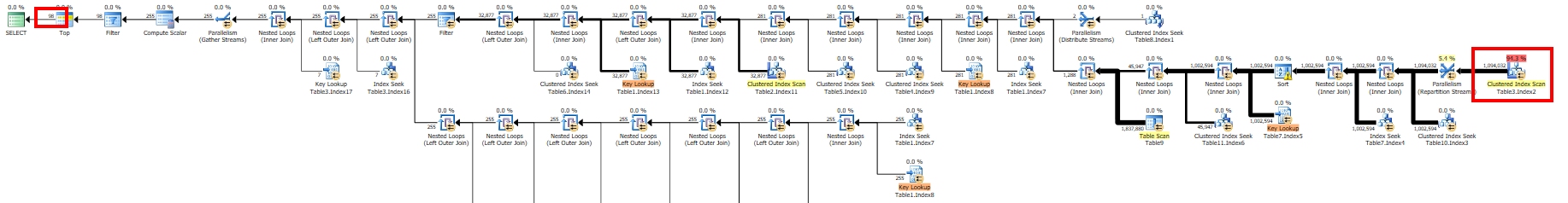

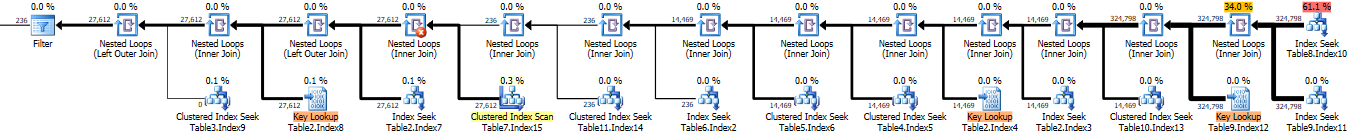

As you can see, the final output of the query is 98 rows. Yet if you look at the far right, the highlighted table (which contains the slots) selects out over 1 million rows, which are eventually reduced down to pretty much nothing.

In this post I’m going to show how after making a few minor changes this stored procedure went from taking just over 30 seconds to finishing in under 1 second!

Tuning the performance

The first thing I did was look at the execution plan. My initial observations were:

- Final row count is 98

- Selecting over 1 million rows from the slots table - this just feels wrong!

- What is that sort operation doing in there?

- Looks like there are a few big and nasty key lookups

Fix attempt 1: I added a new non-clustered index to the slots table to try and reduce the amount of data being pulled back. This didn't work, although it did remove the sort operator.

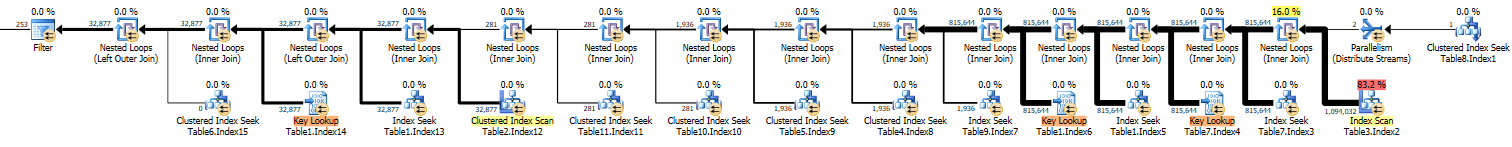

You can see from the above that we are still selecting out over a million rows from the slots table. It's now dawning on me that the indexes aren't really the problem, but rather it's the query that needs to be addressed.

Looking more closely: The query has a bunch of INNER JOINs, one of which joins onto the slots table. In the WHERE clause we do some date filtering on the time range of the slots, based on data from another table. What this means is that SQL Server selects out all the slots in this table and then carries all this data around until it finally filters it down.

Fix attempt 2: Adding date filtering to the INNER JOIN predicate on the slots table to only select future slots

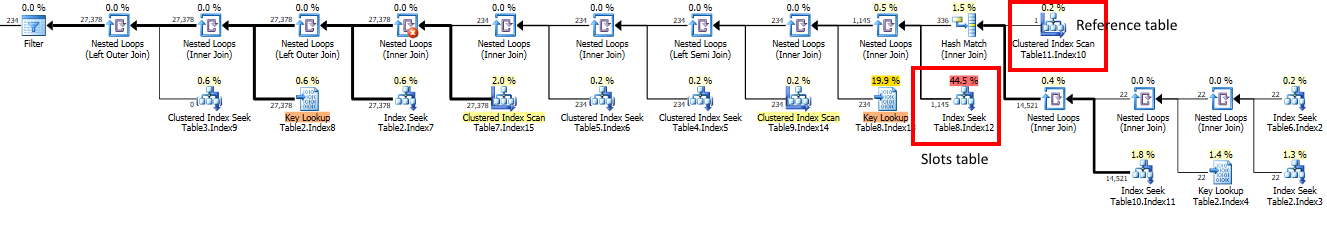

This change has made a noticeable difference, as now only ~600,000 rows are being returned. This is a move in the right direction, but I'm sure that more changes could be made.
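As a rough sketch of the shape of this change: the table and column names below (`dbo.Appointment`, `dbo.SessionInstance`, `dbo.Slot`, `StartTime` and so on) are invented for illustration and are not the real DrDoctor schema. For an INNER JOIN a predicate means the same thing whether it sits in ON or WHERE, so the likely win is the extra condition itself: it compares the slot time against a value known up front rather than a column from another table, so it can be applied as the slots table is read.

```sql
-- Hypothetical schema and parameter, for illustration only.
DECLARE @AppointmentId int = 42;

SELECT s.SlotId, s.StartTime
FROM dbo.Appointment AS a
INNER JOIN dbo.SessionInstance AS si
    ON si.ClinicId = a.ClinicId
INNER JOIN dbo.Slot AS s
    ON s.SessionInstanceId = si.SessionInstanceId
   AND s.StartTime >= SYSDATETIME()   -- new: only consider future slots
WHERE a.AppointmentId = @AppointmentId
  -- existing filter: the range comes from another table, so it is only
  -- known once the appointment row has been joined in
  AND s.StartTime BETWEEN a.EarliestBookingDate AND a.LatestBookingDate;
```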

Looking more closely: Having made some progress in the right direction I went back to the query. I know the solution is to filter that slots table down right at the source, so I started looking for more ways to do that. The query currently has a CTE that selects a row of data about the appointment that is being booked and then this CTE is joined to the main query.

Fix attempt 3: I changed the CTE to join to a configuration table and find the MIN/MAX range of slots that should be selected. Then, instead of joining the CTE to the main query, I declared a bunch of variables and used the CTE to give them their values. With the CTE removed from the main query, I used the variables in its place and changed the slots INNER JOIN to select slots based on the date range given by the MIN/MAX calculated previously.

As you can see this is still moving in the right direction, the number of rows being returned now is down to 324,000 - still a lot, but significantly less than the million I started with!
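A minimal sketch of that pattern, assuming a hypothetical `dbo.BookingConfig` table that holds how far ahead slots may be booked. Every table, column and variable name here is an assumption made for illustration, not the real schema.

```sql
-- Hypothetical schema and parameter, for illustration only.
DECLARE @AppointmentId int = 42;
DECLARE @MinSlotStart datetime2, @MaxSlotStart datetime2;

-- Step 1: use the CTE only to work out the date range, and store it in variables.
WITH BookingWindow AS (
    SELECT MIN(DATEADD(DAY, c.MinDaysAhead, a.ReferralDate)) AS MinSlotStart,
           MAX(DATEADD(DAY, c.MaxDaysAhead, a.ReferralDate)) AS MaxSlotStart
    FROM dbo.Appointment AS a
    INNER JOIN dbo.BookingConfig AS c
        ON c.ClinicId = a.ClinicId
    WHERE a.AppointmentId = @AppointmentId
)
SELECT @MinSlotStart = MinSlotStart,
       @MaxSlotStart = MaxSlotStart
FROM BookingWindow;

-- Step 2: the main query no longer joins to the CTE; the slots join
-- is bounded directly by the variables.
SELECT s.SlotId, s.StartTime
FROM dbo.SessionInstance AS si
INNER JOIN dbo.Slot AS s
    ON s.SessionInstanceId = si.SessionInstanceId
   AND s.StartTime >= @MinSlotStart
   AND s.StartTime <  @MaxSlotStart;
```

Because the range is now held in variables rather than columns of a joined row, the filter on the slots table no longer depends on any other table in the query.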

Looking more closely: I now started to look more broadly to see if it was possible to reduce the 324,000 rows earlier in the query plan. When deciding if a slot is suitable we have to look at the “session instance” (hospital lingo) and make sure that it is “allowed to be booked”. This involves a join to a reference table.

Fix attempt 4: This time I changed the session instance INNER JOIN predicate to also say where the TypeId IN (SELECT TypeId FROM TypeTable WHERE AFlag = 1)

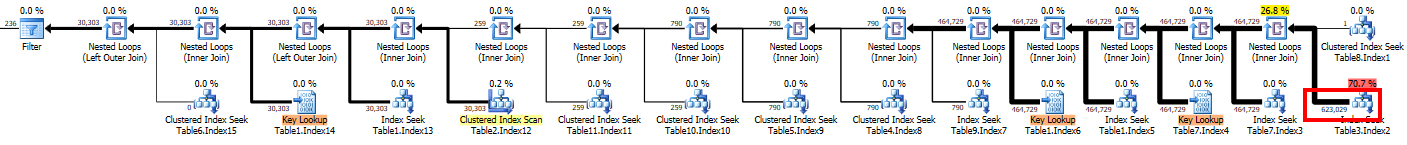

This hasn't reduced the number of slot rows being selected, but it did mean that SQL Server was carrying them around in memory for less time - a good thing! (This is obvious if you compare this query plan to the one above.)

Looking more closely: I'm now increasing in confidence and sure that there is more room for improvement. Looking back at the query I see that we also do some filtering on the slots table based on a booking status type, which again involves a join to a reference table.

Fix attempt 5: Similar to fix attempt 4, I updated the slots table INNER JOIN predicate.
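Sketched out, the join from fix attempt 4 might look something like this. `TypeId`, `TypeTable` and `AFlag` are the names used above; `dbo.Slot`, `dbo.SessionInstance` and their other columns are placeholders for illustration.

```sql
-- dbo.Slot and dbo.SessionInstance are hypothetical names, for illustration only.
SELECT s.SlotId, s.StartTime
FROM dbo.Slot AS s
INNER JOIN dbo.SessionInstance AS si
    ON si.SessionInstanceId = s.SessionInstanceId
   -- new: only session instances whose type is allowed to be booked
   AND si.TypeId IN (SELECT TypeId FROM TypeTable WHERE AFlag = 1);
```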

Nailed it! Take a look at the query plan above: the SQL Server optimizer is now clever enough to find that there are only 336 session instances. It then does a Nested Loops operator with these 336 rows against the slots table to return 1,145 slot rows! That is down from 1,094,032 rows in the first execution plan!

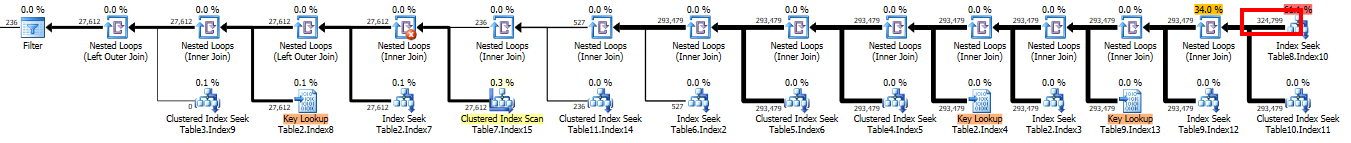

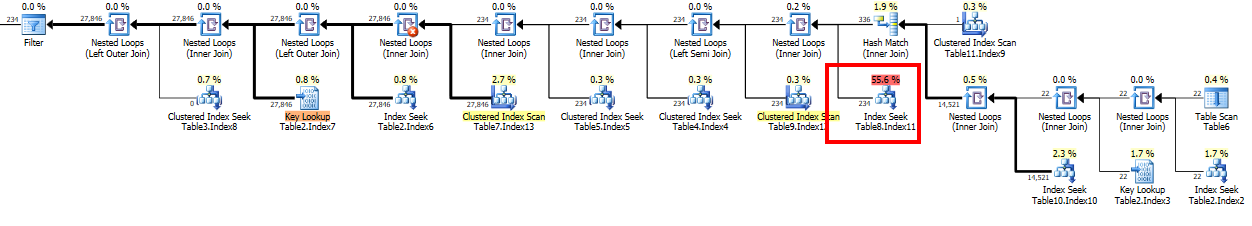

Looking more closely: I haven't finished with this query yet. Looking back at the execution plan, I can see that when SQL Server does the key lookup on the slots table it only finds 234 slots that match the final condition.

Fix attempt 6: I added a new non-clustered index on the columns SQL Server wants to filter on, with the other columns it needs to return added as included columns.

With the non-clustered index added, when SQL Server goes to the slots table it only returns 234 rows.

The query now finishes in under a second! What a huge improvement. There's probably more that could be done, but at this point I'm pretty happy with the result.
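For reference, an index of that kind has roughly this shape. The index name and column lists are guesses for illustration; the real ones depend on what the key lookup in the execution plan is filtering on and returning.

```sql
-- Hypothetical index, table and column names, for illustration only.
CREATE NONCLUSTERED INDEX IX_Slot_SessionInstance_Status_StartTime
    ON dbo.Slot (SessionInstanceId, BookingStatusTypeId, StartTime)  -- columns being filtered on
    INCLUDE (EndTime, LocationId);                                   -- columns only being returned
```

Putting the filter columns in the key and the returned columns in INCLUDE lets the index cover the query, which is what removes the need for a key lookup.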

Reduce the data, then look at Indexes

As you can see from working through my thoughts above, indexes weren't the actual problem with the query. The problem was that too much data was being selected out of a very large table. With a little bit of work (this took me about 2.5 hours) I was able to reduce the number of rows from 1,094,032 down to 234!

In the end an index did help, but arguably the query would probably have performed just as well without an additional non-clustered index.
